A flight from New York to London can cost significantly more when you book it from an IP address in Manhattan than from one in Mumbai. A Google search for “best pizza” yields entirely different results in Tokyo than in Osaka.

The internet is not a uniform space. It changes based on where you stand.

For global businesses, data analysts, and developers, accessing the internet from a single location is no longer sufficient. You need to see what your customers see, exactly where they are.

This requires geo-targeted web scraping. Without it, you are viewing a generic, sterilized version of the web that does not reflect real-world market conditions.

To get accurate intelligence, you must virtually travel to the source.

Why Localized Data Collection Changes Everything Online

The internet is not static. Websites change content based on where they think you are located. This ranges from localized prices, currencies, and results (“geo-customization”) to content that is blocked outright (“geo-blocking”).

If you scrape Amazon using a server in Germany, you get Euro pricing and European shipping estimates. If your target market is the United States, that data is useless.

Dynamic pricing is the biggest factor here. E-commerce platforms adjust prices based on demand, purchasing power, and local competition. Some studies report online prices varying by 15-30% depending on the user’s IP location.

Without hyper-local data collection, you risk basing decisions on prices, search rankings, and ad placements that your actual customers never see.

To solve this, you need residential proxies by country. These tools route your traffic through real devices in your target area, making your bot look like a local user.

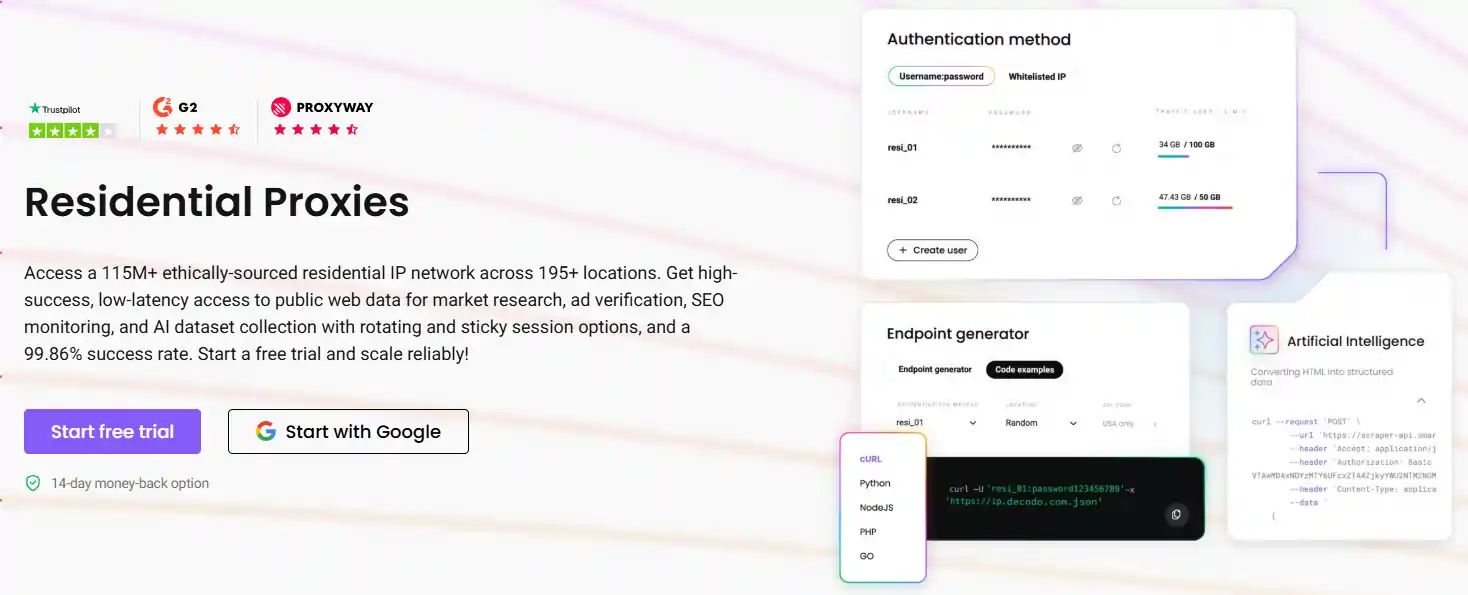

How Residential Proxies Enable Real Local Browsing

To make a scraper in London appear as if it’s browsing from Chicago, use high-quality proxies, specifically residential proxies by country.

Unlike datacenter proxies, which are easily flagged, residential proxies use IP addresses assigned to real devices (like home Wi-Fi) by Internet Service Providers (ISPs). This makes traffic look organic and human.

Advanced providers use a “Backconnect” architecture. Instead of managing thousands of individual proxy lists, you connect to a single gateway entry point. You control the exit location simply by modifying your username string.
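To make the backconnect idea concrete, here is a minimal sketch of how a scraper might assemble its proxy URL. The gateway stays fixed and only the username carries the location. It assumes the `user-...-country-...-city-...` format from the Step 2 example. `GATEWAY_HOST`, the password, the `state-` key, and the space-to-underscore rule for city names are all assumptions to check against your provider's documentation.

```python
from typing import Optional

# Placeholders: take the real gateway host and credentials from your provider.
# Port 8000 matches the Step 2 example in this article.
GATEWAY_HOST = "GATEWAY_HOST"
GATEWAY_PORT = 8000


def build_username(username: str, country: Optional[str] = None,
                   state: Optional[str] = None, city: Optional[str] = None) -> str:
    """Append location parameters to the base username.

    The country/city keys follow this article's example format; the "state"
    key and replacing spaces with underscores are assumptions.
    """
    parts = [f"user-{username}"]
    if country:
        parts.append(f"country-{country.lower()}")
    if state:
        parts.append(f"state-{state.lower()}")
    if city:
        parts.append(f"city-{city.lower().replace(' ', '_')}")
    return "-".join(parts)


def proxy_url(username: str, password: str, **location: Optional[str]) -> str:
    """Every request goes to the same gateway; only the username changes."""
    user = build_username(username, **location)
    return f"http://{user}:{password}@{GATEWAY_HOST}:{GATEWAY_PORT}"
```

For example, `proxy_url("decodo", "PASSWORD", country="us", city="Chicago")` goes through the same gateway as a Paris request. Only the username differs.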

This capability is essential for businesses that need to scrape local search results or verify ad placements in specific markets.

Decodo: Global Reach, Local Precision

When performing geo-targeted web scraping, coverage is everything. You cannot scrape flight prices in Brazil if your provider only has IPs in Europe.

Decodo offers infrastructure built for exactly this need.

This infrastructure is vital for tasks like Google Maps scraping, where proximity to the search location dictates the results.

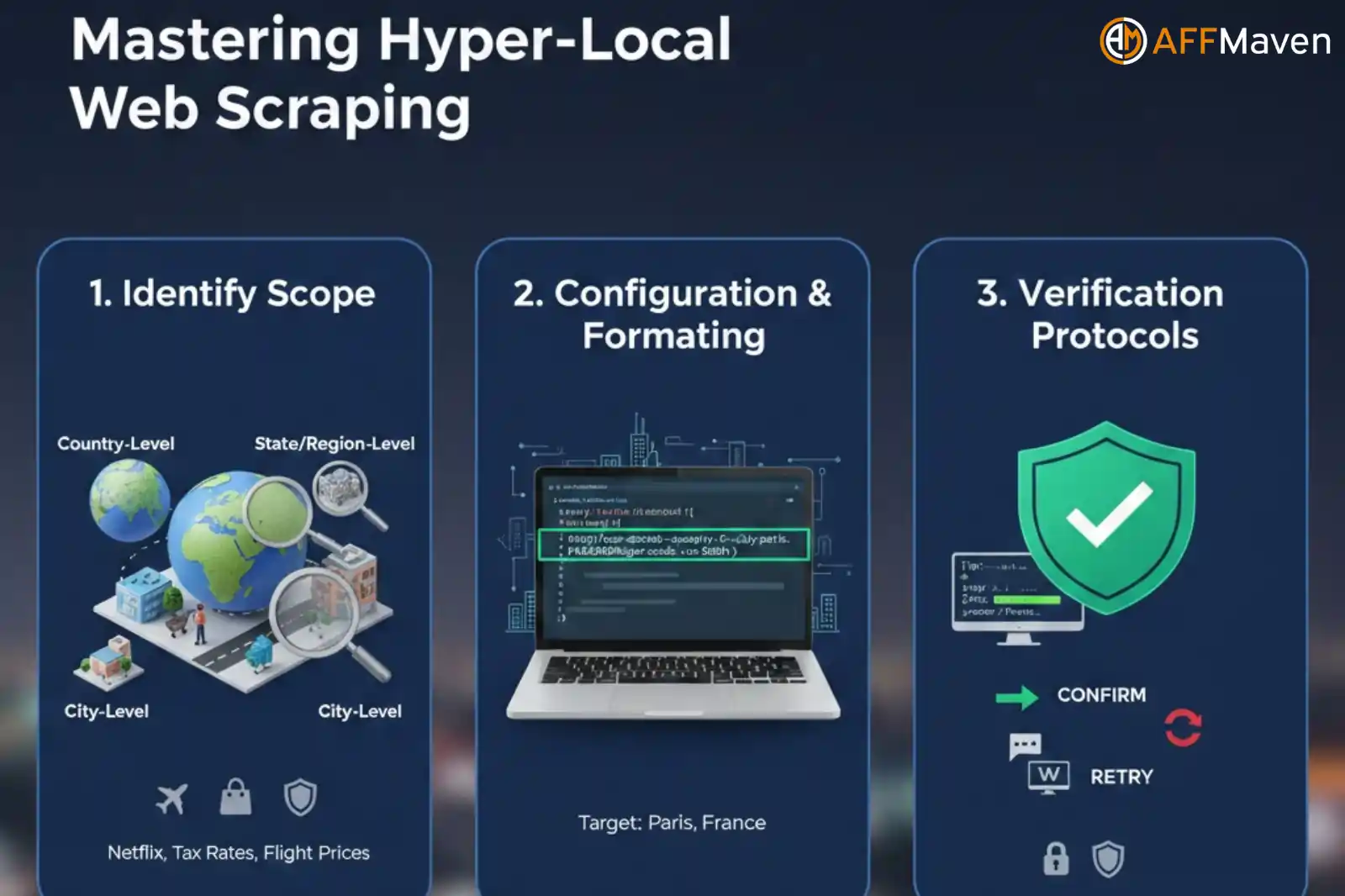

Step-by-Step Strategy for Localized Scraping

Implementing a successful location-based scraping operation involves three critical phases. Following this structure helps ensure high success rates and data accuracy.

Step 1: Identify Your Scope

Before writing code, define the precision level your project demands.

Pro Tip: Do not pay for city-level precision if country-level suffices. However, for SEO and retail, city-level is usually required.

Step 2: Configuration and Formatting

Setting up web scraping with Decodo is straightforward. It does not require complex software installations. It works through standard proxy authentication protocols.

Here is how you format your request to target a specific location.

Concept: Instead of just sending username:password, you send username-country-TARGET:password.

Code Example (Python):

In the proxy string below, PASSWORD and GATEWAY_HOST are placeholders for the credentials and gateway endpoint your Decodo account provides.

```python
import requests

# Target: Paris, France
# Format: user-decodo-country-[country_code]-city-[city_name]
# PASSWORD and GATEWAY_HOST are placeholders for your own credentials and gateway.
proxy = "http://user-decodo-country-fr-city-paris:PASSWORD@GATEWAY_HOST:8000"

proxies = {
    "http": proxy,
    "https": proxy,  # HTTPS requests are tunneled through the same HTTP proxy endpoint
}

url = "https://www.example.com"

response = requests.get(url, proxies=proxies, timeout=30)
response.raise_for_status()  # stop on HTTP errors instead of printing an error page
print(response.text)
```

With this method, you can loop through a list of cities (London, New York, Tokyo, Berlin) and collect the exact data a local resident sees in each one.
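Here is a minimal sketch of that loop. It reuses the username format from the Paris example. The `(country, city)` pairs, the underscore spelling of `new_york`, and the placeholder credentials are assumptions. The `http_get` parameter defaults to `requests.get` but can be replaced with a stub for testing.

```python
from typing import Callable, Dict, Iterable, Optional, Tuple

GATEWAY = "GATEWAY_HOST:8000"  # placeholder: use the endpoint from your account
USERNAME = "decodo"            # placeholder username, as in the example above
PASSWORD = "PASSWORD"          # placeholder password

# (country_code, city) pairs; the exact city spellings are an assumption
CITIES = [("gb", "london"), ("us", "new_york"), ("jp", "tokyo"), ("de", "berlin")]


def proxies_for(country: str, city: str) -> Dict[str, str]:
    """Build a requests-style proxies dict that exits in one country/city."""
    url = f"http://user-{USERNAME}-country-{country}-city-{city}:{PASSWORD}@{GATEWAY}"
    return {"http": url, "https": url}


def scrape_by_city(
    target_url: str,
    cities: Iterable[Tuple[str, str]] = CITIES,
    http_get: Optional[Callable] = None,
) -> Dict[Tuple[str, str], str]:
    """Fetch target_url once per city and return the HTML keyed by (country, city)."""
    if http_get is None:
        import requests  # imported here so the helpers work without requests installed
        http_get = requests.get
    results = {}
    for country, city in cities:
        resp = http_get(target_url, proxies=proxies_for(country, city), timeout=30)
        resp.raise_for_status()  # fail loudly rather than store an error page as "local" data
        results[(country, city)] = resp.text
    return results
```

A call such as `pages = scrape_by_city("https://www.example.com")` lets you compare `pages[("jp", "tokyo")]` with `pages[("de", "berlin")]` side by side.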

Step 3: Verification Protocols

Trust is good; verification is better.

When running a script to scrape flight prices by location, you cannot afford to guess whether the proxy actually exited in the location you requested. You must confirm it.

Before your scraper hits the target website (like an airline or retailer), it should make a preliminary call to a verification service through the same proxy. ipinfo.io, for example, returns JSON data showing your current public IP and its estimated location. whoer.net offers a similar check in the browser.

Implementing a Check

Program your scraper to hit the verification API first.
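Here is a minimal sketch of such a check. It assumes ipinfo.io's public `https://ipinfo.io/json` endpoint, which reports fields such as `ip`, `city`, `region`, and `country` (a two-letter code) for the requesting address. IP geolocation is an estimate, so the city comparison can produce false alarms. A country-level match is the more robust gate. The `http_get` hook is an illustrative testing seam, not part of any library.

```python
from typing import Callable, Optional

IPINFO_URL = "https://ipinfo.io/json"  # reports ip, city, region, country, ...


def location_matches(info: dict, expected_country: str,
                     expected_city: Optional[str] = None) -> bool:
    """Compare ipinfo-style JSON with the location requested from the proxy."""
    if info.get("country", "").upper() != expected_country.upper():
        return False
    if expected_city is not None:
        return info.get("city", "").lower() == expected_city.lower()
    return True


def verify_exit(proxies: dict, expected_country: str,
                expected_city: Optional[str] = None,
                http_get: Optional[Callable] = None) -> dict:
    """Ask the verification API where the proxy exits; raise if it is the wrong place."""
    if http_get is None:
        import requests  # imported here so location_matches works without requests
        http_get = requests.get
    info = http_get(IPINFO_URL, proxies=proxies, timeout=15).json()
    if not location_matches(info, expected_country, expected_city):
        raise RuntimeError(
            f"Proxy exited in {info.get('city')}, {info.get('country')}; "
            f"expected {expected_city or 'any city'}, {expected_country}"
        )
    return info
```

Call `verify_exit(proxies, "fr", "paris")` with the same `proxies` dict you use for the real request, and scrape only if it returns without raising.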

This step ensures that your hyper-local data collection remains uncontaminated by incorrect geo-locations.

Advanced Tactics: Handling Large-Scale Geo-Data

When scaling localized scraping, you encounter unique challenges. Managing sessions and IP rotation becomes critical.

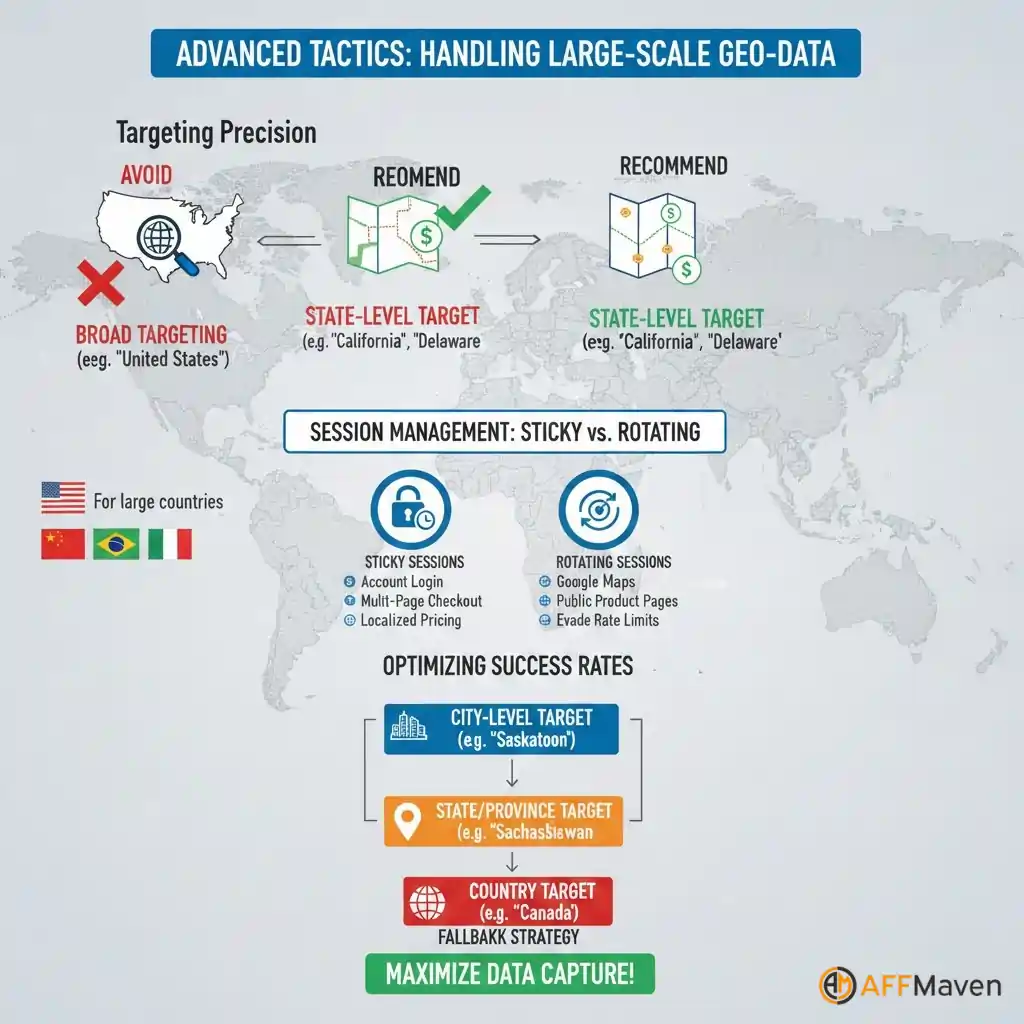

Avoid Broad Targeting for Specific Data

A common mistake is using “United States” generic targeting when you need precise tax rates or shipping costs.

California has different sales tax rules than Delaware, which has no statewide sales tax. If you use a generic US proxy, you might get an IP in Delaware and miss California’s tax data entirely. Always use state- or province-level targeting for large countries like the US, India, Brazil, or China.

Sticky vs. Rotating Sessions

Decodo allows you to choose between sticky sessions (keeping the same IP for a set time) and rotating sessions (new IP per request).

- Use Rotating Sessions: When scraping Google Maps results or public product pages. Rotating IPs quickly helps you stay under per-IP rate limits and collect data faster.

- Use Sticky Sessions: When logging into accounts or navigating through a multi-page checkout flow to scrape localized pricing. Changing IPs mid-session can trigger security alerts.
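In practice, the difference usually comes down to whether the username carries a session identifier. Here is a minimal sketch that assumes a hypothetical `session-<id>` username parameter. Check your provider's documentation for the exact key and for how long a sticky IP is held. The credentials and gateway are placeholders.

```python
import uuid
from typing import Dict, Optional

GATEWAY = "GATEWAY_HOST:8000"              # placeholder endpoint
USERNAME, PASSWORD = "decodo", "PASSWORD"  # placeholder credentials


def proxies(country: str, session_id: Optional[str] = None) -> Dict[str, str]:
    """Rotating when session_id is None; sticky when the same session_id is reused.

    The "session-<id>" username parameter is an assumption, not a confirmed API.
    """
    user = f"user-{USERNAME}-country-{country}"
    if session_id is not None:
        user += f"-session-{session_id}"
    url = f"http://{user}:{PASSWORD}@{GATEWAY}"
    return {"http": url, "https": url}


def new_sticky_session() -> str:
    """Create one random ID per logical flow (e.g. one checkout) and reuse it on every step."""
    return uuid.uuid4().hex[:8]
```

Use `proxies("us")` for each product-page request to get fresh IPs. For a checkout flow, create one ID with `new_sticky_session()` and apply it to every step. One way is to set it once on a `requests.Session` with `session.proxies.update(proxies("us", sid))`.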

Optimizing for Success Rates

Sometimes, a specific city might have fewer active peers online. If you strictly request “Saskatoon, Canada” and the pool is tight, requests might fail.

Strategy: Start with strict city-level proxy targeting. If requests time out, configure your code to fall back to state/province targeting, and finally to country targeting. This cascade ensures you still get data even if the exact city node is temporarily unavailable.
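The cascade can be sketched as an ordered list of usernames, tried from most to least specific. This sketch assumes the country/city format used earlier plus a hypothetical `state-` key. Using `sk` as Saskatchewan's identifier is only illustrative. The function returns the username that succeeded, so each record can be labeled with the precision it actually achieved.

```python
from typing import Callable, List, Optional, Tuple

GATEWAY = "GATEWAY_HOST:8000"              # placeholder endpoint
USERNAME, PASSWORD = "decodo", "PASSWORD"  # placeholder credentials


def targeting_cascade(country: str, state: Optional[str] = None,
                      city: Optional[str] = None) -> List[str]:
    """Usernames from most to least specific: city, then state/province, then country."""
    base = f"user-{USERNAME}-country-{country}"
    levels = []
    if city:
        levels.append(f"{base}-city-{city}")
    if state:
        levels.append(f"{base}-state-{state}")  # the "state-" key is an assumption
    levels.append(base)
    return levels


def fetch_with_fallback(url: str, country: str, state: Optional[str] = None,
                        city: Optional[str] = None, http_get: Optional[Callable] = None,
                        timeout: float = 20.0) -> Tuple[str, str]:
    """Try each targeting level in turn; return (username_used, page_text)."""
    if http_get is None:
        import requests  # imported here so targeting_cascade works without requests
        http_get = requests.get
    last_error: Optional[Exception] = None
    for user in targeting_cascade(country, state, city):
        proxy = f"http://{user}:{PASSWORD}@{GATEWAY}"
        try:
            resp = http_get(url, proxies={"http": proxy, "https": proxy}, timeout=timeout)
            resp.raise_for_status()
            return user, resp.text
        except OSError as exc:  # requests' Timeout, ProxyError, HTTPError all subclass OSError
            last_error = exc
    raise RuntimeError("all targeting levels failed") from last_error
```

A call like `fetch_with_fallback(url, "ca", "sk", "saskatoon")` tries Saskatoon first, then the province, then Canada as a whole.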

Turn Global Data into Local Market Intelligence

Data accuracy depends on context. A price is only accurate if you know where that price applies. A search ranking is only real if you know who is seeing it.

Decodo provides the infrastructure to answer these questions. With a pool covering 195 locations and the ability to drill down to specific cities, we eliminate geographic blind spots.

Don’t settle for generic global averages. Use geo-targeted web scraping to see the market as it truly exists. Whether you need residential proxies by country for compliance monitoring or deep city-level targeting for pricing intelligence, the capability is available.

You no longer need to guess what the local market looks like. You can simply be there.

Affiliate Disclosure: This post may contain affiliate links, which means we may receive a commission if you purchase something we recommend, at no additional cost to you (none whatsoever!)